GAMES Webinar 2021 – Session 187 (Vision Track) | Kai Zhang (Cornell University), Wenqi Xian (Cornell University), Songyou Peng (ETH Zurich & Max Planck Institute for Intelligent Systems), Xiuming Zhang (MIT CSAIL)

[GAMES Webinar 2021, Session 187] (Vision Track)

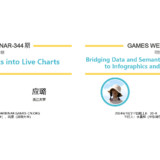

Speaker 1: Kai Zhang (Cornell University)

Time: Monday, June 21, 2021, 8:00-8:20 PM (Beijing Time)

Title: NeRF++: Analyzing and Improving Neural Radiance Fields

Abstract:

Neural Radiance Fields (NeRF) achieve impressive view synthesis results for a variety of capture settings, including 360 capture of bounded scenes and forward-facing capture of bounded and unbounded scenes. NeRF fits multi-layer perceptrons (MLPs) representing view-invariant opacity and view-dependent color volumes to a set of training images, and samples novel views based on volume rendering techniques. In this technical report, we first remark on radiance fields and their potential ambiguities, namely the shape-radiance ambiguity, and analyze NeRF’s success in avoiding such ambiguities. Second, we address a parametrization issue involved in applying NeRF to 360 captures of objects within large-scale, unbounded 3D scenes. Our method improves view synthesis fidelity in this challenging scenario.
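The abstract relies on two mechanisms: compositing density and color samples along each camera ray (volume rendering), and reparametrizing unbounded space so that distant background points stay in a bounded input range. The sketch below illustrates both in plain Python. It assumes the standard discrete quadrature from the original NeRF paper and, for the second function, the inverted-sphere mapping described in the NeRF++ paper (a point at distance r > 1 from the origin is encoded by its unit direction and 1/r). The names `composite` and `inverted_sphere` are hypothetical, and the code is an illustration, not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def composite(sigmas: Sequence[float], colors: Sequence[Vec3],
              deltas: Sequence[float]) -> Tuple[Vec3, List[float]]:
    """Composite samples along one ray with the discrete volume rendering rule.

    sigmas: volume density at each sample, ordered front to back
    colors: RGB radiance at each sample
    deltas: distance from each sample to the next one
    Returns the pixel color and the per-sample weights.
    """
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unoccluded
    weights: List[float] = []
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return (rgb[0], rgb[1], rgb[2]), weights


def inverted_sphere(p: Vec3) -> Tuple[float, float, float, float]:
    """Encode a point outside the unit sphere as (unit direction, 1/r).

    The last coordinate lies in (0, 1), so arbitrarily distant background
    points map into a bounded range that an MLP can be trained on.
    """
    r = math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)
    if r <= 1.0:
        raise ValueError("inverted-sphere encoding expects a point with r > 1")
    return (p[0] / r, p[1] / r, p[2] / r, 1.0 / r)
```

Each weight equals T_i · (1 − exp(−σ_i δ_i)), so the weights sum to at most 1 and a dense sample hides whatever lies behind it. In the second function, the 1/r coordinate shrinks toward 0 as a point moves toward infinity, which is why far-away scene content can still be represented with a finite input range.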

Speaker Bio:

Kai Zhang is currently a Ph.D. candidate at Cornell Tech, Cornell University, advised by Prof. Noah Snavely. He works on 3D reconstruction, view synthesis, and inverse rendering from multi-view images, with a particular interest in combining physics principles with deep learning. He received his bachelor's degree from Tsinghua University.

Homepage: https://kai-46.github.io/website/

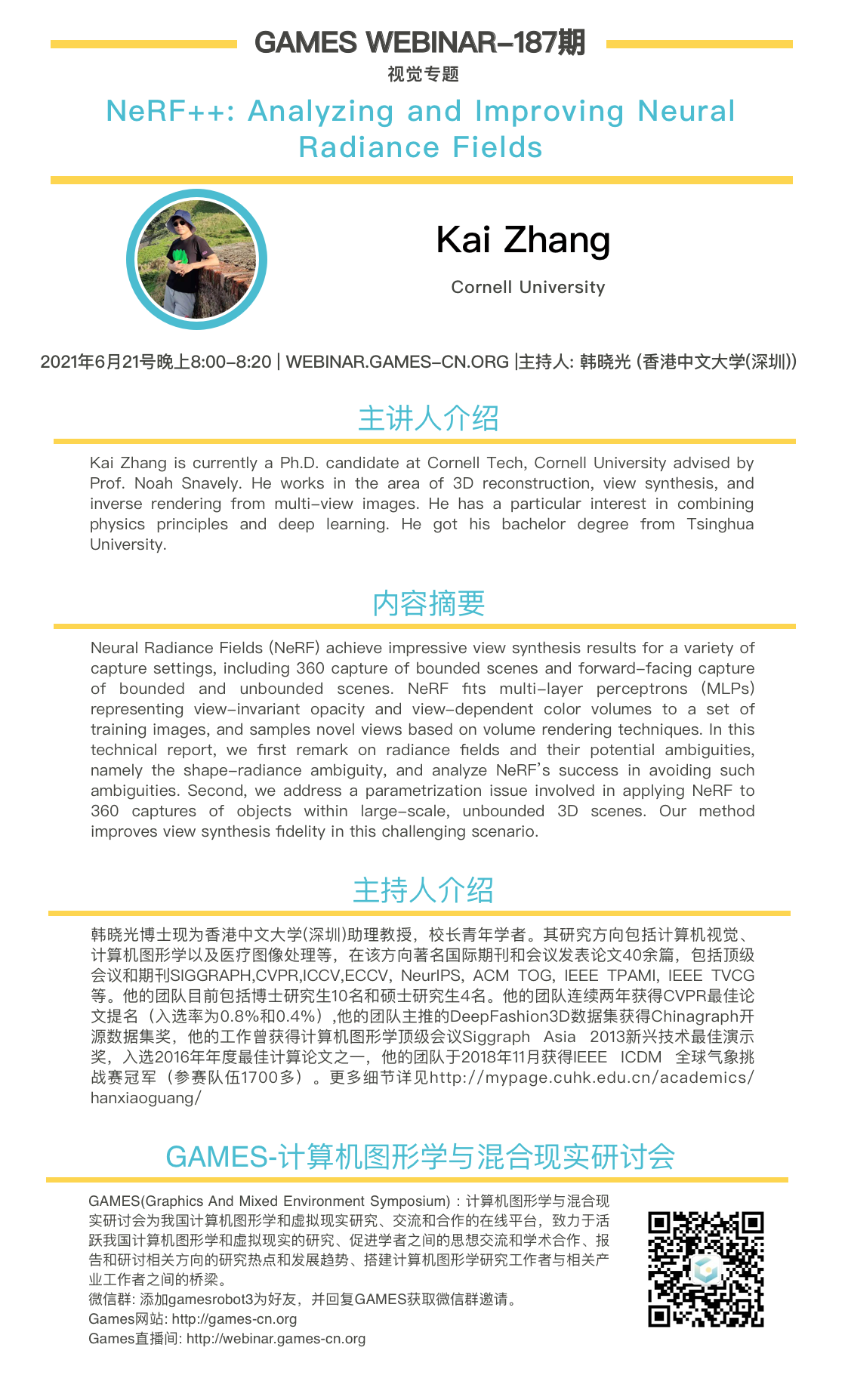

Speaker 2: Wenqi Xian (Cornell University)

Time: Monday, June 21, 2021, 8:20-8:40 PM (Beijing Time)

Title: Space-time Neural Irradiance Fields for Free-Viewpoint Video

Abstract:

We present a method that learns a spatiotemporal neural irradiance field for dynamic scenes from a single video. Our learned representation enables free-viewpoint rendering of the input video. Our method builds upon recent advances in implicit representations. Learning a spatiotemporal irradiance field from a single video poses significant challenges because the video contains only one observation of the scene at any point in time. The 3D geometry of a scene can be legitimately represented in numerous ways, since varying geometry (motion) can be explained with varying appearance and vice versa. We address this ambiguity by constraining the time-varying geometry of our dynamic scene representation using scene depth estimated by video depth estimation methods, aggregating content from individual frames into a single global representation. We provide an extensive quantitative evaluation and demonstrate compelling free-viewpoint rendering results.
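One way to read the depth constraint is as an extra loss term: render an expected depth along each ray from the same compositing weights used for color, and penalize its distance to the externally estimated depth. The sketch below assumes that form (an L1 depth term added to an L2 color term, balanced by a hypothetical `depth_weight`) and assumes the estimated depth has already been brought to the scene's scale; the paper's exact loss and weighting may differ. The time coordinate of the spatiotemporal field is left out because each ray here belongs to a single frame and its weights are taken as given. The names `expected_depth` and `ray_loss` are hypothetical.

```python
from typing import Sequence


def expected_depth(weights: Sequence[float], t_vals: Sequence[float]) -> float:
    """Depth rendered along a ray: sample distances averaged by compositing weights."""
    return sum(w * t for w, t in zip(weights, t_vals))


def ray_loss(pred_rgb: Sequence[float], gt_rgb: Sequence[float],
             weights: Sequence[float], t_vals: Sequence[float],
             est_depth: float, depth_weight: float = 0.1) -> float:
    """Photometric L2 loss plus an L1 term pulling rendered depth toward an estimate.

    est_depth: depth from an external video depth estimator (assumed to be
    in the scene's scale already). depth_weight: hypothetical balancing factor.
    """
    color_loss = sum((p - g) ** 2 for p, g in zip(pred_rgb, gt_rgb))
    depth_loss = abs(expected_depth(weights, t_vals) - est_depth)
    return color_loss + depth_weight * depth_loss
```

The depth term is what breaks the geometry/appearance ambiguity in this sketch: two fields that reproduce the same pixel color can still differ in rendered depth, and only the one consistent with the estimate incurs no penalty.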

Speaker Bio:

Wenqi Xian is a third-year PhD student in Computer Science at Cornell Tech, advised by Noah Snavely. Her research interests lie at the intersection of computer vision and graphics, with a focus on democratizing the creation of video content and special effects. She has interned at Adobe and Facebook in past summers.

Homepage: https://www.cs.cornell.edu/~wenqixian/

Speaker 3: Songyou Peng (ETH Zurich & Max Planck Institute for Intelligent Systems)

Time: Monday, June 21, 2021, 8:40-9:00 PM (Beijing Time)

Title: Towards Practical Applications of NeRF

Abstract:

NeRF has revolutionized the field of novel view synthesis by fitting a neural radiance field to RGB images. However, several challenges still keep NeRF from real-world applications. In this talk, we will discuss two of them: (a) NeRF renders slowly, and (b) NeRF does not admit accurate surface reconstruction. We will then present our recent work tackling these challenges: first KiloNeRF, which significantly speeds up NeRF rendering, and then UNISURF, which reconstructs accurate surfaces without any input masks. We believe these works are an important step towards practical applications of NeRF.
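KiloNeRF's speed-up comes from replacing one large MLP with thousands of tiny MLPs, each responsible for one cell of a regular 3D grid over the scene's bounding box, so every query evaluates only a very small network. The sketch below shows only the routing step: mapping a point to its grid cell and calling the network that owns that cell. The tiny networks are stand-in Python callables, `cell_index` and `query` are hypothetical names, and treating a cell without a network as empty space (density 0) is a simplification assumed here for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def cell_index(p: Point, bbox_min: Point, bbox_max: Point, res: int) -> Cell:
    """Return the (i, j, k) cell of a res x res x res grid that contains p."""
    idx = []
    for x, lo, hi in zip(p, bbox_min, bbox_max):
        u = (x - lo) / (hi - lo)                # normalize coordinate to [0, 1]
        i = int(u * res)
        idx.append(min(max(i, 0), res - 1))     # clamp points on the far faces
    return (idx[0], idx[1], idx[2])


def query(points: Sequence[Point],
          networks: Dict[Cell, Callable[[Point], float]],
          bbox_min: Point, bbox_max: Point, res: int) -> List[float]:
    """Evaluate each point with the tiny network that owns its grid cell."""
    densities = []
    for p in points:
        net = networks.get(cell_index(p, bbox_min, bbox_max, res))
        # Simplification: a cell without a network is treated as empty space.
        densities.append(net(p) if net is not None else 0.0)
    return densities
```

A GPU implementation would batch all points falling into the same cell and evaluate them together rather than looping point by point; the loop is kept here only for readability.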

Speaker Bio:

Songyou Peng is a PhD student at ETH Zurich and the Max Planck Institute for Intelligent Systems, as part of the Max Planck ETH Center for Learning Systems. He is co-supervised by Marc Pollefeys and Andreas Geiger. His research interests lie at the intersection of 3D vision and deep learning. More specifically, he is interested in applying neural implicit and explicit representations to 3D reconstruction and novel scene synthesis.

Homepage: https://people.inf.ethz.ch/sonpeng/

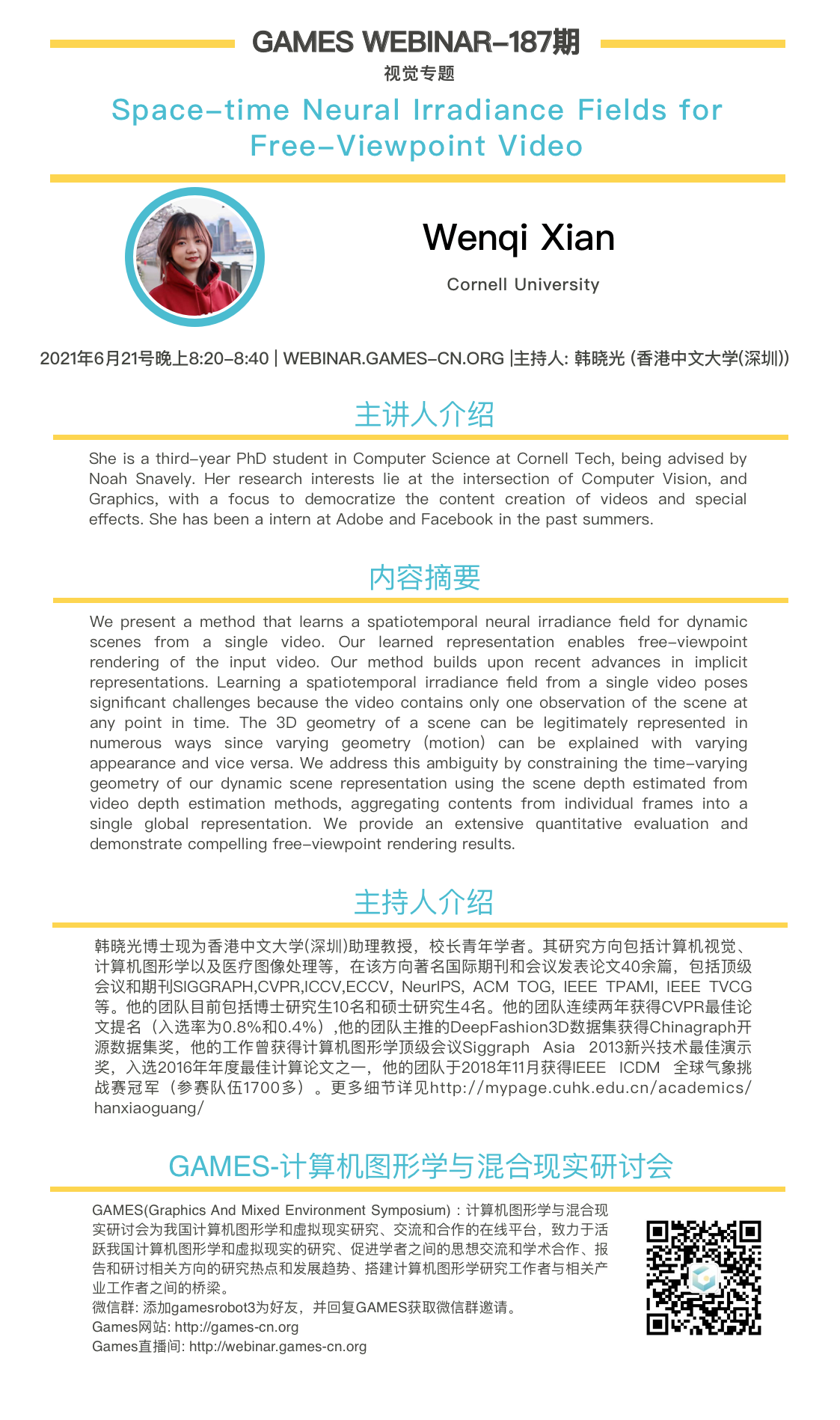

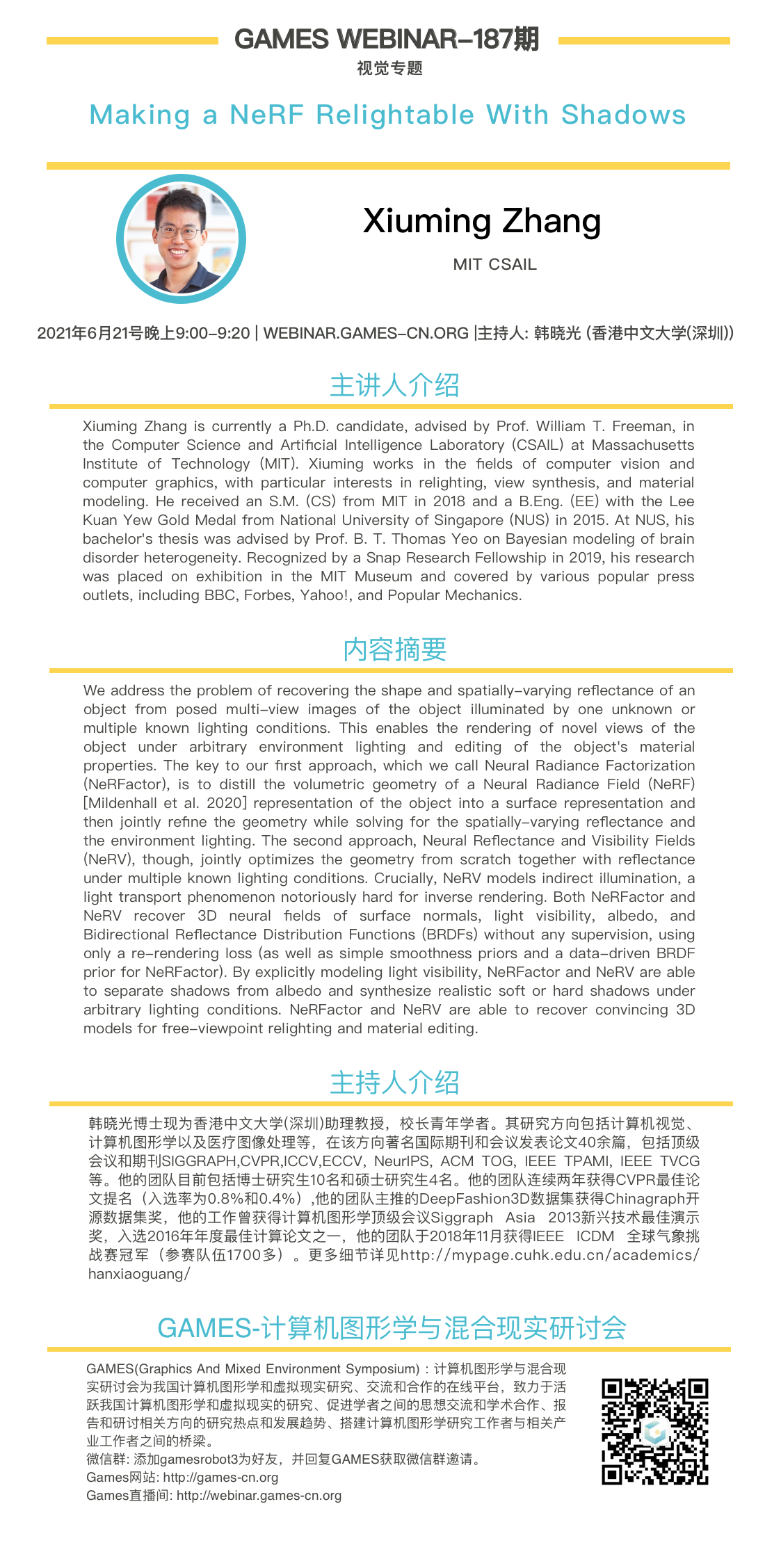

Speaker 4: Xiuming Zhang (MIT CSAIL)

Time: Monday, June 21, 2021, 9:00-9:20 PM (Beijing Time)

Title: Making a NeRF Relightable With Shadows

Abstract:

We address the problem of recovering the shape and spatially-varying reflectance of an object from posed multi-view images of the object illuminated by one unknown or multiple known lighting conditions. This enables rendering novel views of the object under arbitrary environment lighting and editing the object's material properties. The key to our first approach, which we call Neural Radiance Factorization (NeRFactor), is to distill the volumetric geometry of a Neural Radiance Field (NeRF) [Mildenhall et al. 2020] representation of the object into a surface representation, and then jointly refine the geometry while solving for the spatially-varying reflectance and the environment lighting. The second approach, Neural Reflectance and Visibility Fields (NeRV), instead jointly optimizes the geometry from scratch together with the reflectance under multiple known lighting conditions. Crucially, NeRV models indirect illumination, a light transport phenomenon that is notoriously hard to handle in inverse rendering. Both NeRFactor and NeRV recover 3D neural fields of surface normals, light visibility, albedo, and Bidirectional Reflectance Distribution Functions (BRDFs) without any supervision, using only a re-rendering loss (as well as simple smoothness priors and a data-driven BRDF prior for NeRFactor). By explicitly modeling light visibility, NeRFactor and NeRV are able to separate shadows from albedo and synthesize realistic soft or hard shadows under arbitrary lighting conditions. NeRFactor and NeRV recover convincing 3D models for free-viewpoint relighting and material editing.
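The claim that explicit light visibility lets a model separate shadows from albedo can be made concrete with a simplified shading model. The sketch below assumes a Lambertian BRDF (albedo / π), direct lighting only, and an environment map discretized into directional lights with known solid angles. NeRFactor and NeRV instead use learned BRDFs, and NeRV also models indirect illumination; none of that is shown here. The function `shade` and its parameters are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade(albedo: Vec3, normal: Vec3, light_dirs: Sequence[Vec3],
          light_rgb: Sequence[Vec3], visibility: Sequence[float],
          solid_angles: Sequence[float]) -> Vec3:
    """Outgoing radiance at a surface point lit by a discretized environment map.

    Light i arrives from unit direction light_dirs[i] with radiance light_rgb[i]
    over solid angle solid_angles[i]. visibility[i] in [0, 1] is the fraction of
    that light not blocked by the object itself (0 means fully shadowed).
    """
    out = [0.0, 0.0, 0.0]
    for d, radiance, v, d_omega in zip(light_dirs, light_rgb, visibility, solid_angles):
        cos_theta = max(0.0, dot(normal, d))  # lights below the surface contribute nothing
        for k in range(3):
            # Lambertian BRDF (albedo / pi) times visible incoming light times cosine.
            out[k] += albedo[k] / math.pi * radiance[k] * v * cos_theta * d_omega
    return (out[0], out[1], out[2])
```

Because visibility scales the incoming light rather than the albedo, a dark pixel can be explained in this simplified model as a shadow (low visibility toward bright lights) while the albedo stays unchanged, so the shadow moves when the lighting is changed.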

Speaker Bio:

Xiuming Zhang is currently a Ph.D. candidate, advised by Prof. William T. Freeman, in the Computer Science and Artificial Intelligence Laboratory (CSAIL) at Massachusetts Institute of Technology (MIT). Xiuming works in computer vision and computer graphics, with particular interests in relighting, view synthesis, and material modeling. He received an S.M. (CS) from MIT in 2018 and a B.Eng. (EE) with the Lee Kuan Yew Gold Medal from the National University of Singapore (NUS) in 2015. At NUS, his bachelor's thesis, advised by Prof. B. T. Thomas Yeo, was on Bayesian modeling of brain disorder heterogeneity. He was awarded a Snap Research Fellowship in 2019, and his research has been exhibited in the MIT Museum and covered by various popular press outlets, including BBC, Forbes, Yahoo!, and Popular Mechanics.

Homepage: http://people.csail.mit.edu/xiuming/index.html

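Host Bio:

Dr. Xiaoguang Han (韩晓光) is currently an Assistant Professor and Presidential Young Scholar at The Chinese University of Hong Kong, Shenzhen. His research covers computer vision, computer graphics, and medical image processing. He has published more than 40 papers in well-known international journals and conferences in these areas, including top venues such as SIGGRAPH, CVPR, ICCV, ECCV, NeurIPS, ACM TOG, IEEE TPAMI, and IEEE TVCG. His team currently includes 10 Ph.D. students and 4 master's students. His team received CVPR Best Paper nominations in two consecutive years (selection rates of 0.8% and 0.4%), and the DeepFashion3D dataset developed by his team won the Chinagraph Open-Source Dataset Award. His work received the Best Demo Award in Emerging Technologies at SIGGRAPH Asia 2013, a top computer graphics conference, and was selected as one of the best computing papers of 2016. In November 2018, his team won first place in the IEEE ICDM Global Weather Challenge (more than 1,700 participating teams). More details: http://mypage.cuhk.edu.cn/academics/hanxiaoguang/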

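The "Tutorials" section of the GAMES homepage explains "How to watch the GAMES Webinar live stream" and "How to join the GAMES WeChat group".

The "Resource Sharing" section of the GAMES homepage provides videos and slides from past webinars.

Live stream link: http://webinar.games-cn.org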