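GAMES Webinar 2018, Session 75 (SIGGRAPH Asia 2018 Paper Presentations) | Lin Gao (Institute of Computing Technology, Chinese Academy of Sciences), Li Yi (Stanford University)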

[GAMES Webinar 2018, Session 75 (SIGGRAPH Asia 2018 Paper Presentations)]

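Speaker 1: Lin Gao, Institute of Computing Technology, Chinese Academy of Sciences

Time: November 29, 2018, 8:00-8:45 PM (Beijing time)

Host: Weiwei Xu, Zhejiang University (homepage: http://www.cad.zju.edu.cn/home/weiweixu/weiweixu_en.htm)

Title: Automatic Unpaired Shape Deformation Transfer

Abstract: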

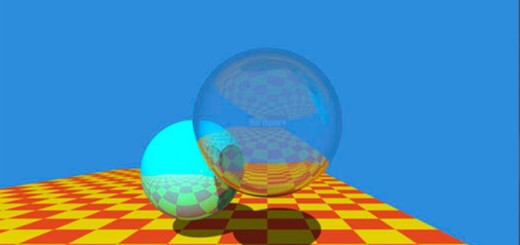

Transferring deformation from a source shape to a target shape is a very useful technique in computer graphics. State-of-the-art deformation transfer methods require either point-wise correspondences between source and target shapes, or pairs of deformed source and target shapes with corresponding deformations. However, in most cases, such correspondences are not available and cannot be reliably established using an automatic algorithm. Therefore, substantial user effort is needed to label the correspondences or to obtain and specify such shape sets. In this work, we propose a novel approach to automatic deformation transfer between two unpaired shape sets without correspondences. 3D deformation is represented in a high-dimensional space. To obtain a more compact and effective representation, two convolutional variational autoencoders are learned to encode source and target shapes to their latent spaces. We exploit a Generative Adversarial Network (GAN) to map deformed source shapes to deformed target shapes, both in the latent spaces, which ensures that the shapes obtained from the mapping are indistinguishable from the target shapes. This is still an under-constrained problem, so we further utilize a reverse mapping from target shapes to source shapes and incorporate a cycle consistency loss, i.e., applying both mappings in sequence should return the input shape. This VAE-Cycle GAN (VC-GAN) architecture is used to build a reliable mapping between shape spaces. Finally, a similarity constraint is employed to ensure the mapping is consistent with visual similarity, achieved by learning a similarity neural network that takes the embedding vectors from the source and target latent spaces and predicts the light field distance between the corresponding shapes. Experimental results show that our fully automatic method is able to obtain high-quality deformation transfer results with unpaired data sets, comparable to or better than those of existing methods where strict correspondences are required.
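The cycle consistency idea in the abstract can be illustrated with a minimal Python/NumPy sketch. This is not the authors' VC-GAN implementation: the latent codes are random vectors, the two mappings (`forward`, `backward_good`, `backward_bad`) are hypothetical linear maps, and the L1 penalty is an assumed choice of loss.

```python
import numpy as np

def cycle_consistency_loss(z_src, forward, backward):
    """Mean L1 distance between latent codes and their round-trip images."""
    z_cycle = backward(forward(z_src))  # source -> target -> source
    return float(np.mean(np.abs(z_cycle - z_src)))

rng = np.random.default_rng(0)

# Hypothetical 8-dimensional latent codes for 16 source shapes.
z = rng.normal(size=(16, 8))

# Assumption: the source-to-target mapping is a random orthogonal matrix,
# so its exact inverse is simply the transpose.
Q, _ = np.linalg.qr(rng.normal(size=(8, 8)))

def forward(codes):
    return codes @ Q        # source latent space -> target latent space

def backward_good(codes):
    return codes @ Q.T      # exact inverse of forward

def backward_bad(codes):
    return codes            # ignores what forward did

loss_good = cycle_consistency_loss(z, forward, backward_good)
loss_bad = cycle_consistency_loss(z, forward, backward_bad)
print(f"consistent pair:   {loss_good:.2e}")
print(f"inconsistent pair: {loss_bad:.2e}")
```

A pair of mappings that invert each other drives the loss to numerical zero, while a mismatched pair is penalized. In a trained model this term would presumably be added to the adversarial and similarity losses rather than used on its own.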

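Speaker bio:

Dr. Lin Gao is an associate researcher and master's supervisor at the Institute of Computing Technology (ICT), Chinese Academy of Sciences. Dr. Gao's research interests include digital geometry processing and deep learning. Dr. Gao received a Ph.D. in engineering from Tsinghua University and was a government-sponsored visiting researcher at RWTH Aachen University in Germany. Dr. Gao was selected for the Young Elite Scientists Sponsorship Program of the China Association for Science and Technology and for the ICT Hundred Stars Program, and has received the First Prize for Technological Invention in the CCF Science and Technology Awards, the First Prize for Science and Technology Progress from the China Simulation Federation, and the ICT Outstanding Star Award, among others, and has led several projects funded by the National Natural Science Foundation of China. The resulting work has been published in top conferences and journals in the field, such as ACM SIGGRAPH/TOG, IEEE TVCG, CVPR, AAAI, IEEE VR, and Eurographics/CGF. Dr. Gao has also supervised undergraduate students who published multiple papers at CCF rank-A conferences.

Speaker homepage: http://www.geometrylearning.com/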

Speaker 2: Li Yi, Stanford University

Time: November 29, 2018, 8:45-9:30 PM (Beijing time)

Host: Weiwei Xu, Zhejiang University (homepage: http://www.cad.zju.edu.cn/home/weiweixu/weiweixu_en.htm)

Title: Deep Part Induction from Articulated Object Pairs

Abstract:

Object functionality is often expressed through part articulation – as when the two rigid parts of a scissor pivot against each other to perform the cutting function. Such articulations are often similar across objects within the same functional category. In this paper we explore how the observation of different articulation states provides evidence for part structure and motion of 3D objects. Our method takes as input a pair of unsegmented shapes representing two different articulation states of two functionally related objects, and induces their common parts along with their underlying rigid motion. This is a challenging setting, as we assume no prior shape structure, no prior shape category information, no consistent shape orientation, the articulation states may belong to objects of different geometry, plus we allow inputs to be noisy and partial scans, or point clouds lifted from RGB images. Our method learns a neural network architecture with three modules that respectively propose correspondences, estimate 3D deformation flows, and perform segmentation. To achieve optimal performance, our architecture alternates between correspondence, deformation flow, and segmentation prediction iteratively in an ICP-like fashion. Our results demonstrate that our method significantly outperforms state-of-the-art techniques in the task of discovering articulated parts of objects. In addition, our part induction is object-class agnostic and successfully generalizes to new and unseen objects.
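For readers unfamiliar with the "ICP-like" alternation mentioned in the abstract, the sketch below shows classical rigid ICP in Python/NumPy: it alternates between proposing correspondences (nearest neighbors) and estimating a rigid motion (Kabsch algorithm). It is only the classical analogue, not the paper's learned network, which also predicts deformation flow and segmentation. All names (`fit_rigid`, `nearest`, `icp`) and the synthetic point clouds are assumptions made for illustration.

```python
import numpy as np

def fit_rigid(P, Q):
    """Least-squares R, t with R @ p_i + t ~= q_i (Kabsch algorithm)."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T   # force a proper rotation
    return R, cq - R @ cp

def nearest(P, Q):
    """Index of the closest point in Q for each point in P, and the mean squared distance."""
    d2 = ((P[:, None, :] - Q[None, :, :]) ** 2).sum(axis=-1)
    idx = d2.argmin(axis=1)
    return idx, float(d2[np.arange(len(P)), idx].mean())

def icp(P, Q, iters=20):
    """Alternate correspondence proposal and rigid motion estimation."""
    X = P.copy()
    R_total, t_total = np.eye(3), np.zeros(3)
    for _ in range(iters):
        idx, _ = nearest(X, Q)                # step 1: correspondences
        R, t = fit_rigid(X, Q[idx])           # step 2: rigid motion
        X = X @ R.T + t                       # step 3: apply the motion
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, X

# Synthetic example: Q is P rotated by 0.1 rad about the z-axis and shifted.
rng = np.random.default_rng(0)
P = rng.uniform(size=(40, 3))
c, s = np.cos(0.1), np.sin(0.1)
R_true = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.05, -0.02, 0.03])
Q = P @ R_true.T + t_true

R_fit, t_fit = fit_rigid(P, Q)                # with known correspondences
_, err_before = nearest(P, Q)
_, _, X_icp = icp(P, Q)                       # with unknown correspondences
_, err_after = nearest(X_icp, Q)
print(f"mean squared nearest-neighbor distance: {err_before:.3e} -> {err_after:.3e}")
```

With known correspondences the rigid fit recovers the motion directly. Without them, each alternation can only lower the nearest-neighbor error or leave it unchanged, which is the behavior that the term "ICP-like" refers to.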

Speaker bio:

Li Yi is a sixth-year Ph.D. candidate at the Stanford AI Lab, supervised by Prof. Leonidas Guibas. Before that, he received his B.S.E. from Tsinghua University. He has interned at Adobe Research and Baidu Research. His research interests are in 3D computer vision and shape analysis, with the goal of equipping robotic agents with the ability to understand and interact with the 3D world. He has co-organized several academic events, including the ShapeNet Challenge 2017 and VLEASE 2018 (an ECCV 2018 workshop).

Speaker homepage: https://cs.stanford.edu/~ericyi/

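The "Tutorials" section of the GAMES homepage explains how to watch the GAMES Webinar live stream and how to join the GAMES WeChat group.

The "Resources" section of the GAMES homepage hosts videos, slides, and other materials from past webinars.

Live stream link: http://webinar.games-cn.org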